Local LLama LLM AI Chat Query Tool Chrome Extension, CRX Download

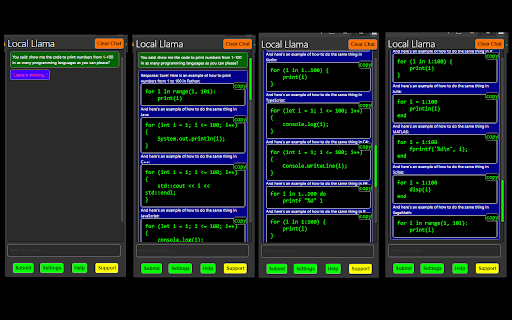

Query a local model from your browser.

This Chrome extension lets you query local models hosted on your own server, directly from within your browser.

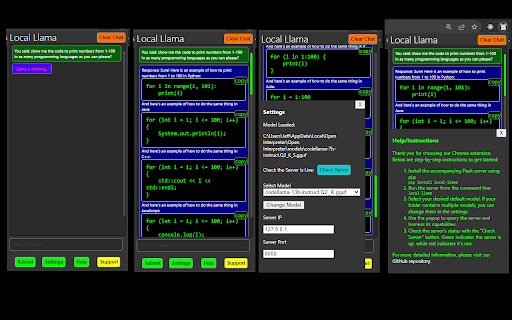

The extension is compatible with both Llama CPP and .gguf models. The latest version includes a sample Llama CPP Flask server, which you can find in the GitHub repository:

GitHub Repository - Local Llama Chrome Extension:

https://github.com/mrdiamonddirt/local-llama-chrome-extension

To set up the server, install the server's pip package with the following command:

```
pip install local-llama
```

Then, just run:

```
local-llama
```

That is all the setup required: run the provided Python server, install the extension, and you can query your local models from the browser.
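As a rough illustration of what querying a local model server involves, the sketch below builds a JSON POST request with Python's standard library. The listing does not document the server's address, route, or payload format, so `BASE_URL`, `QUERY_PATH`, and the `{"prompt": ...}` body are hypothetical placeholders; check the GitHub repository for the actual values before using it.

```python
import json
import urllib.request

# Hypothetical values: the listing does not state the server's address or route.
BASE_URL = "http://localhost:8000"
QUERY_PATH = "/query"


def build_query_request(prompt, base_url=BASE_URL, path=QUERY_PATH):
    """Build (but do not send) a JSON POST request carrying the prompt."""
    # The {"prompt": ...} payload shape is an assumption, not the documented API.
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_query(prompt, timeout=60.0):
    """Send the prompt to the local server and return the raw response text."""
    request = build_query_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the server running, `send_query("Hello")` would return whatever text the server responds with. Keeping request construction separate from sending makes it easy to inspect the request without a live server.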

| Field | Value |
| --- | --- |
| Category | 📚 Education |
| Extension ID | ekobbgdgkocdnnjoahoojakmoimfjlbm |
| Platform | Chrome |
| Rating | ☆☆☆☆☆ 0 |
| Number of ratings | 2 |
| Extension homepage | https://chromewebstore.google.com/detail/local-llama-llm-ai-chat-q/ekobbgdgkocdnnjoahoojakmoimfjlbm |
| Version | 1.0.6 |
| Size | 375KiB |
| Official store downloads | 139 |
| Download link | |
| Last updated | 2023-10-02 00:00:00 |

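How to install a CRX extension file

Step 1: Open the Extensions page in Chrome

Step 2: Enter chrome://extensions/ in the address bar

Step 3: Turn on [Developer mode] in the top-right corner

Step 4: Restart Chrome (important)

Step 5: Reopen the extension management page

Step 6: Drag the downloaded crx file onto the page to complete the installation

Note: make sure you are using the latest version of Chrome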
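Before dragging a downloaded file into the extensions page, it can help to confirm that it really is a CRX package. The sketch below is a minimal check, not part of the extension or this site: it relies on CRX files beginning with the magic bytes `Cr24` followed by a 32-bit little-endian format version. The function names are illustrative.

```python
import struct

CRX_MAGIC = b"Cr24"  # CRX packages start with these four bytes


def read_crx_version(header):
    """Return the CRX format version from the first 8 bytes of a file.

    Raises ValueError if the bytes do not look like a CRX header.
    """
    if len(header) < 8 or header[:4] != CRX_MAGIC:
        raise ValueError("not a CRX file: missing 'Cr24' magic bytes")
    # The version is a 32-bit little-endian unsigned integer after the magic.
    (version,) = struct.unpack("<I", header[4:8])
    return version


def crx_version_of_file(path):
    """Read only the first 8 bytes of the file at `path` and check them."""
    with open(path, "rb") as f:
        return read_crx_version(f.read(8))
```

For example, `crx_version_of_file("extension.crx")` (a hypothetical filename) returns the format version, or raises `ValueError` if the download is something else, such as a plain ZIP archive or an HTML error page.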

Recommended similar extensions

Llama 3.1 405b

Chat with Llama 3.1 405b and get instant answers! Save your history and enjoy seamless conversations with AI.

Ollama Chrome API

Allow websites to access your locally running Olla

ChatLlama: Chat with AI

ChatLlama: Your AI Conversational Companion for Ch

Local LLama LLM AI Chat Query Tool

Query a local model from your browser. Elevate your

open-os LLM Browser Extension

Quick access to your favorite local LLM from your

Local LLM Helper

Interact with your local LLM server directly from

postpixie.com Chrome Extension

Enhance your social experience with postpixie.com

sidellama

Sidellama is a tiny browser-augmented cha

Offline AI Chat (Ollama)

Chat interface for your local Ollama AI models. Re

WebextLLM

Browser-native LLMs at your fingertips. Unleash the

Chatty for LLMs

Helps you to chat and RAG with your LLMs via ollam

smartGPT Multi-Tab Extension

A Chrome extension to interact with ChatGPT across

ReadAnything

Read anything with the power of AI. Are you bothered